Transfer Learning

In this chapter we will have a look at pretrained neural networks and how we can use them.

Pretrained neural networks

Pretrained neural networks are models that have already been trained on large-scale datasets, typically containing millions of images or more. Training them requires powerful computing resources, and in the process the models learn hierarchical representations of the visual features present in the training data.

For convolutional neural networks (CNNs), which are widely used for image classification and other computer vision tasks, pretrained models offer several significant advantages:

Feature extraction: Pretrained models are excellent feature extractors. They have learned to recognize a wide range of visual patterns and features, including edges, textures, shapes, and objects. By leveraging a pretrained model, you can benefit from these learned features without having to train a CNN from scratch, which can be time-consuming and computationally expensive.

Transfer learning: Transfer learning is a technique that allows you to use knowledge gained from one task to improve performance on a different, related task. With pretrained models, you can leverage the visual representations learned on a large, generic dataset (e.g., ImageNet) and apply them to your specific task, even if you have a relatively small dataset. This is especially useful when you don't have enough labeled data to train a CNN from scratch, as the pretrained model acts as a starting point, capturing general visual knowledge that can be fine-tuned for your specific task.

Improved generalization: Pretrained models have already learned to generalize well on a large and diverse dataset. They have learned to recognize common visual patterns and can often capture high-level concepts that are transferable across different tasks. By using a pretrained model, you can benefit from this generalization capability, which can help improve the performance of your own models, particularly when you have limited training data.

Reduced training time and computational resources: Training a CNN from scratch can be computationally intensive and time-consuming, especially when dealing with large-scale datasets. By starting with a pretrained model, you can significantly reduce the training time and computational resources required. The initial layers of the pretrained model have already learned low-level features, which you can freeze and use as a fixed feature extractor, while only training the later layers of the network that are task-specific. This approach allows you to achieve good performance with less effort and resources.

State-of-the-art performance: Published pretrained models usually come from architectures that performed very well on benchmark datasets at the time of their release. By using one, you benefit from those advances in deep learning research without having to develop and train your own model from scratch.

Three pretrained CNNs

There are a lot of pretrained neural networks, but we will introduce you to three of them.

VGG (Visual Geometry Group):

VGG is a convolutional neural network architecture proposed by the Visual Geometry Group at the University of Oxford.

It gained popularity for its simplicity and effectiveness. The main idea behind VGG is to use a series of smaller convolutional filters (3x3) stacked on top of each other, followed by max-pooling layers.

VGG networks come in different variants, such as VGG16 and VGG19. The number indicates how many weight layers (convolutional and fully connected) the network has, so the deepest variant has 19 such layers.

VGG models are known for their uniform architecture, with only convolutional and pooling layers, followed by fully connected layers at the end. This simplicity makes it easier to understand and implement.

VGG models achieved excellent performance on various image classification tasks, and they have been widely used as a benchmark for evaluating new architectures.

ResNet50 (Residual Network):

ResNet50 is a variant of the ResNet architecture proposed by Microsoft Research.

ResNet introduced the concept of residual learning, which helps address the problem of vanishing gradients in deep networks. The key idea is to use skip connections (also known as shortcut connections) that add the input of a block to its output. The block then only has to learn a residual function on top of the identity mapping, which makes deep architectures easier to optimize.

ResNet50 refers to a specific variant of ResNet that has 50 layers, including convolutional layers, pooling layers, and fully connected layers.

ResNet architectures won the classification task of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2015, surpassing previous models and significantly reducing the error rate.

The architecture of ResNet50 includes residual blocks with shortcut connections, which allow information to flow directly from earlier layers to later layers, mitigating the vanishing gradients problem and enabling the network to learn more effectively.
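To make the shortcut idea concrete, here is a minimal sketch of a residual block in PyTorch. It is a simplified two-convolution block, not the exact bottleneck block that ResNet50 uses, and the class name `ResidualBlock` and the channel count are chosen only for illustration.

```python
import torch
from torch import nn


class ResidualBlock(nn.Module):
    """Toy residual block (illustrative): output = relu(F(x) + x)."""

    def __init__(self, channels: int):
        super().__init__()
        # padding=1 keeps the spatial size, so F(x) and x can be added.
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(channels)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channels)
        self.relu = nn.ReLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # F(x): the residual function learned by the stacked layers.
        residual = self.relu(self.bn1(self.conv1(x)))
        residual = self.bn2(self.conv2(residual))
        # Shortcut connection: the input is added back unchanged.
        return self.relu(residual + x)


block = ResidualBlock(channels=8)
x = torch.randn(2, 8, 16, 16)  # batch of 2 feature maps with 8 channels
y = block(x)
print(y.shape)  # same shape as the input
```

Because the input is added back unchanged, gradients can flow through the addition directly to earlier layers, which is the effect described above.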

MobileNet:

MobileNet is a family of lightweight neural network architectures designed for mobile and embedded devices, where computational resources are limited.

MobileNet models are specifically optimized for efficiency, aiming to strike a balance between accuracy and model size.

The key technique used in MobileNet is depthwise separable convolutions. Instead of applying a standard convolution that operates on all input channels, depthwise separable convolutions split the convolution into a depthwise convolution (operating on individual input channels separately) followed by a pointwise convolution (a 1x1 convolution to combine the outputs).

This separation significantly reduces the number of parameters and computations required, making MobileNet models more lightweight and faster compared to larger architectures like VGG or ResNet.
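The saving can be checked with simple arithmetic. The sketch below counts the weights (ignoring biases) of a standard convolution and of a depthwise separable one; the layer sizes (3x3 kernel, 32 input channels, 64 output channels) are arbitrary example values.

```python
def standard_conv_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    # Every output channel has one kernel_size x kernel_size filter per input channel.
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    # Depthwise step: one kernel_size x kernel_size filter per input channel.
    depthwise = kernel_size * kernel_size * in_channels
    # Pointwise step: a 1x1 convolution that mixes the channels.
    pointwise = in_channels * out_channels
    return depthwise + pointwise


standard = standard_conv_params(3, 32, 64)
separable = depthwise_separable_params(3, 32, 64)
print(standard)   # 18432
print(separable)  # 2336
print(f"reduction factor: {standard / separable:.1f}x")
```

For this example layer the separable version needs roughly an eighth of the weights, and the gap grows with the number of output channels.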

MobileNet models have been widely adopted in various applications, including real-time object detection and image classification on resource-constrained devices.

In this course we will use MobileNet, because it is very lightweight and can be trained in reasonable time even on a CPU.

Creating our own image dataset

We created an image dataset earlier in the course. Now we will send the images of that dataset through a pretrained neural network. Let's have another look at the image dataset that we will use:

What is that Normalize function about?

The purpose of normalization is to standardize the pixel values of the images across different channels.

In the context of image data, normalization is commonly performed by subtracting the mean and dividing by the standard deviation. This centers the data around zero and scales it to roughly unit variance.

The Normalize transformation in PyTorch takes two arguments: mean and std. These arguments specify the mean and standard deviation values for each channel of the image data. For our dataset, the mean and std values are set to [0.485, 0.456, 0.406] and [0.229, 0.224, 0.225], respectively.

These values are precomputed statistics from a large dataset: they are the commonly used per-channel mean and standard deviation of the ImageNet dataset. Because the pretrained models were trained on images normalized this way, our own images should be normalized with the same values.

By applying the Normalize transformation, each image channel (red, green, and blue) is normalized independently by subtracting the corresponding mean value and dividing by the corresponding standard deviation value. This helps ensure that the pixel values across different channels have a similar scale and range, which can aid in the training and convergence of neural networks.
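As a worked example of this formula, the sketch below normalizes single RGB pixels by hand, assuming the pixel values have already been scaled to the range [0, 1] (as `ToTensor` does). The function name `normalize_pixel` is made up for illustration.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    # Per channel: subtract the channel mean, divide by the channel std.
    return [(value - m) / s for value, m, s in zip(rgb, MEAN, STD)]


# A pixel that equals the channel means maps exactly to zero.
print(normalize_pixel([0.485, 0.456, 0.406]))  # [0.0, 0.0, 0.0]

# A pure white pixel ends up a bit more than two standard deviations above zero.
print(normalize_pixel([1.0, 1.0, 1.0]))
```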

Loading pretrained models

Now it's time to load a pretrained MobileNet model. We will show you how this can be done with PyTorch.

Assuming you have already loaded and preprocessed the images using the ImageFolder class and defined the data_loader as mentioned earlier, you can proceed to load the pretrained MobileNet model:

Next, you need to set the model to evaluation mode and move it to the appropriate device (CPU or GPU) for computation:
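A minimal sketch of this step follows. The helper name `prepare_for_inference` is made up; it works with any `nn.Module`, including the MobileNet model loaded before.

```python
import torch
from torch import nn


def prepare_for_inference(model: nn.Module):
    """Hypothetical helper: returns the model in eval mode and the device it is on."""
    # Use the GPU when one is available, otherwise fall back to the CPU.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = model.to(device)
    # eval() switches layers such as dropout and batch normalization
    # to their inference behavior.
    model.eval()
    return model, device
```

With a loaded model this would be used as `model, device = prepare_for_inference(model)`.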

After loading and preparing the model, you can iterate over the images in the data_loader and pass them through the model to obtain predictions:
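The loop could look like the following sketch. The names `images`, `outputs`, `model`, `device`, and `data_loader` follow the text; wrapping the loop in a function called `predict` and collecting the results in one tensor are choices made for this illustration.

```python
import torch


def predict(model, data_loader, device):
    """Hypothetical helper: runs every batch through the model and stacks the outputs."""
    all_outputs = []
    # No gradients are needed for inference, which saves memory and time.
    with torch.no_grad():
        for images, _labels in data_loader:
            images = images.to(device)
            outputs = model(images)
            # Move the results back to the CPU so they can be concatenated.
            all_outputs.append(outputs.cpu())
    return torch.cat(all_outputs)
```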

In the code above, images are moved to the appropriate device using images.to(device). Then, a forward pass is performed through the model using outputs = model(images). The torch.no_grad() context manager is used to disable gradient calculation since you are only doing inference and not training.

What does a pretrained model output?

Now we have seen how to send an image through a pretrained model. But what does the outputs variable contain? For each image it contains a vector of size 1000. This is because pretrained models are usually trained on the ImageNet dataset, which contains more than one million images from 1000 classes and is often used to train convolutional networks like MobileNet. The vector holds one raw score (logit) per class; applying a softmax to it yields the probabilities for each of these 1000 classes.
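Strictly speaking, torchvision's MobileNetV2 ends in a linear layer without a softmax, so the values it returns are unnormalized scores. The sketch below shows how they can be turned into probabilities and a predicted class index. It uses random numbers as a stand-in for real model outputs, and `to_probabilities` is a made-up helper name.

```python
import torch


def to_probabilities(outputs: torch.Tensor) -> torch.Tensor:
    # Softmax over the class dimension turns the scores of each image
    # into non-negative values that sum to 1.
    return torch.softmax(outputs, dim=1)


outputs = torch.randn(4, 1000)  # stand-in for model(images) with a batch of 4
probabilities = to_probabilities(outputs)

# Highest probability and the index of the corresponding ImageNet class.
top_prob, top_class = probabilities.max(dim=1)
print(probabilities.sum(dim=1))  # each entry is 1 up to rounding
print(top_class)
```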

How can we train MobileNet on our own dataset?

As our dataset does not have 1000 classes, we need to modify the MobileNet model. Do you remember the chart from the previous chapter, where we explained that convolutional neural networks can be divided into two parts: a feature extractor and a classification head? The feature extractor contains the convolution and pooling layers, while the classification head contains linear layers that map the output of the last layer of the feature extractor to the class scores.

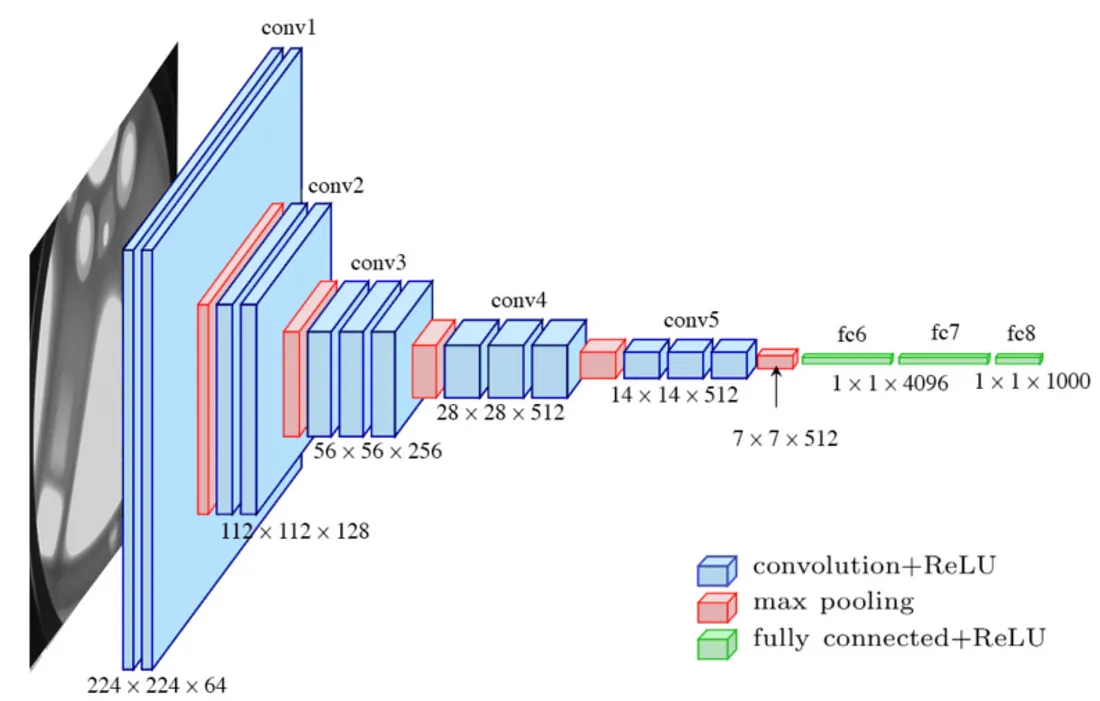

The image above shows the layers of the VGG model, which is very similar to our own MNIST model but uses many more layers.

The blue and red blocks are the convolutional and pooling layers and belong to the feature extractor of the model. The green blocks are the linear layers, which map the output of the last pooling layer to the scores of the 1000 classes.

Adapting the model to our own dataset

When we want to train the model on our own dataset, we just need to replace the classification head of the model. The layers of the feature extractor are not changed at all. This means that during training we freeze the weights of the feature extractor and only train the linear layers of the classification head. This is very resource friendly, as linear layers can be trained easily even on CPUs.

Why does this work?

The question is, why can we just use the weights of the pretrained feature extractor without training them on our own dataset? The answer is the huge amount of data the model was trained on. When the model saw the more than one million images of the ImageNet dataset, it had to learn very useful features in the convolutional layers that allow the model to predict the 1000 classes correctly. As the ImageNet dataset contains a wide variety of images, we can assume that the learned features are also useful for our own dataset. The only thing we really need to learn is how the features of the pretrained network relate to the classes of our own dataset. This mapping is learned in the classification head, which is the only part of the model we will train.

But what if the learned features are not useful for my dataset?

Sometimes we have very specialized datasets, for example in biology or medicine. Most datasets used for pretraining do not contain such images, which is why the model may perform poorly on them even though it was pretrained on a large dataset. In these cases we also need to train the feature extractor of the model. This is called fine-tuning. Fine-tuning means that the weights of the complete model, including the feature extractor, are updated during training. As this is significantly more resource intensive than training only the classification head, it is usually done on a GPU.

Hands on transfer learning with PyTorch

Now it's time to implement transfer learning with PyTorch. We have already seen how we can load a pretrained model and run images through it. Now let's replace the classification head of the MobileNet model.

To replace the classification layers of the MobileNet model with a new classification head for 10 classes, you can follow these steps:

Load the pretrained MobileNet model:

Freeze the weights of the model, so that these weights are not updated during training:

Replace the classification head of the MobileNet model. In MobileNetV2, the classifier attribute holds the head, and its last element is the fully connected layer that we swap for a new one with 10 outputs:

The requires_grad attribute of the linear layers that we created is set to True by default, which means they will be the only layers updated during training.

Training the model

Now we are ready to train the model on our own dataset. The following code contains all steps for training, including:

Creating the dataset

Transforming images to standardized tensors

Loading the pretrained model

Freezing weights of the pretrained model

Replacing the classification head by a new one with 10 classes

Defining the Cross Entropy loss function

Defining the stochastic gradient descent (SGD) optimizer

Training Loop

Training convolutional networks with PyTorch is pretty straightforward, isn't it?

Fine-tuning the model

If we want to fine-tune the model we just need to modify the part of the code where we set requires_grad to False. We could introduce a new boolean variable named fine_tuning which will be set to True if the feature extractor should also be trained or False if only the classification head should be trained.
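One possible shape for this switch is sketched here. It assumes the model exposes `features` and `classifier` attributes, as torchvision's MobileNetV2 does; the function name `set_trainable` is made up.

```python
from torch import nn


def set_trainable(model: nn.Module, fine_tuning: bool) -> None:
    """Hypothetical helper: choose which parts of the model get trained."""
    # Feature extractor: trained only when fine-tuning is requested.
    for param in model.features.parameters():
        param.requires_grad = fine_tuning
    # Classification head: always trained.
    for param in model.classifier.parameters():
        param.requires_grad = True
```

The optimizer should be created after this call, so that it receives exactly the parameters that are meant to be updated. When fine-tuning, a smaller learning rate than for the head alone is a common choice, since large updates can quickly destroy the pretrained features.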